Setting up a custom robots.txt file is one of the easiest and most effective ways to optimize your blog for search engines. In systems such as WordPress, plugins make managing robots.txt fairly painless; on Blogger, Google’s free blogging platform, you have to set it up manually.

This post will explain what a robots.txt file does, why it is important to Blogger SEO, and how you can customize it to improve your blog’s ranking in search engines.

What is a Robots.txt File?

A robots.txt file is a simple text file placed in the root directory of your site that tells search engine crawlers (also known as robots) which parts of your website they may crawl. Through this file you can control crawling behavior and indicate which areas crawlers should or should not visit.

In simple words, the robots.txt file is a guide that tells search engines how to navigate your content. Setting a custom robots.txt in Blogger can also help your SEO, because it lets you keep crawlers away from low-value pages and avoid duplicate content problems.

Why is Customizing Robots.txt Important for Blogger?

By default, Blogger creates a standard robots.txt file, but it gives you only limited control over how search engine crawlers treat your blog. Customizing it brings several benefits, including:

- Control Over Indexing: A custom robots.txt file lets you specify which pages or directories crawlers should visit or ignore, which is essential for optimizing SEO.

- Prevents Duplicate Content: Blogger generates URLs for multiple archive pages (by date, category, etc.), which can lead to duplicate content issues. By controlling which pages are crawled, you can reduce duplicate indexing and the SEO problems that come with it.

- Improves Crawling Efficiency: Search engines have a “crawl budget,” or a limited number of pages they will crawl on your site. A custom robots.txt file helps search engines prioritize your most important pages, like your posts, while ignoring less relevant ones.

- Enhances User Privacy: Sometimes, you may want to exclude certain pages, like search results or admin panels, from being indexed. A custom robots.txt file can ask search engines to stay out of these areas.

How to Enable Custom Robots.txt in Blogger

Blogger makes it easy to add a custom robots.txt file. Follow these steps to enable and configure it:

- Log into Blogger: Go to your Blogger dashboard.

- Navigate to Settings: In the left-hand sidebar, click on Settings.

- Find Crawlers and Indexing: Scroll down to the “Crawlers and Indexing” section.

- Enable Custom Robots.txt: Toggle the “Enable custom robots.txt” setting to On.

- Enter Your Custom Code: After enabling the option, a box will appear for you to enter your custom robots.txt code.

Once enabled, Blogger will use this custom robots.txt file to guide search engines.
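After saving, it can be worth confirming that the file your blog serves matches what you entered. The following is a minimal Python sketch of one way to do that, using only the standard library. The helper names `robots_url` and `fetch_robots` are made up for this illustration, and `yourblog.blogspot.com` is a placeholder for your own address.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_url(blog_url: str) -> str:
    """Return the robots.txt URL for a blog; the file lives at the site root."""
    return urljoin(blog_url, "/robots.txt")


def fetch_robots(blog_url: str, timeout: float = 10.0) -> str:
    """Download the live robots.txt and return it as text (needs network access)."""
    with urlopen(robots_url(blog_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `print(fetch_robots("https://yourblog.blogspot.com/"))` with your real blog address should show the rules you pasted into the settings box.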

Basic Structure of a Custom Robots.txt

The basic structure of a robots.txt file includes the following components:

- User-agent: This directive specifies which search engine crawlers the rule applies to. “User-agent: *” applies to all crawlers, while specifying “User-agent: Googlebot” would apply the rule only to Google’s crawler.

- Disallow: This directive tells crawlers which pages or directories they should not crawl. For example, “Disallow: /search” prevents crawlers from fetching your blog’s search results.

- Allow: This directive explicitly permits crawling of specific pages or subdirectories.

- Sitemap: Including a link to your sitemap here can help search engines find all your pages more efficiently.

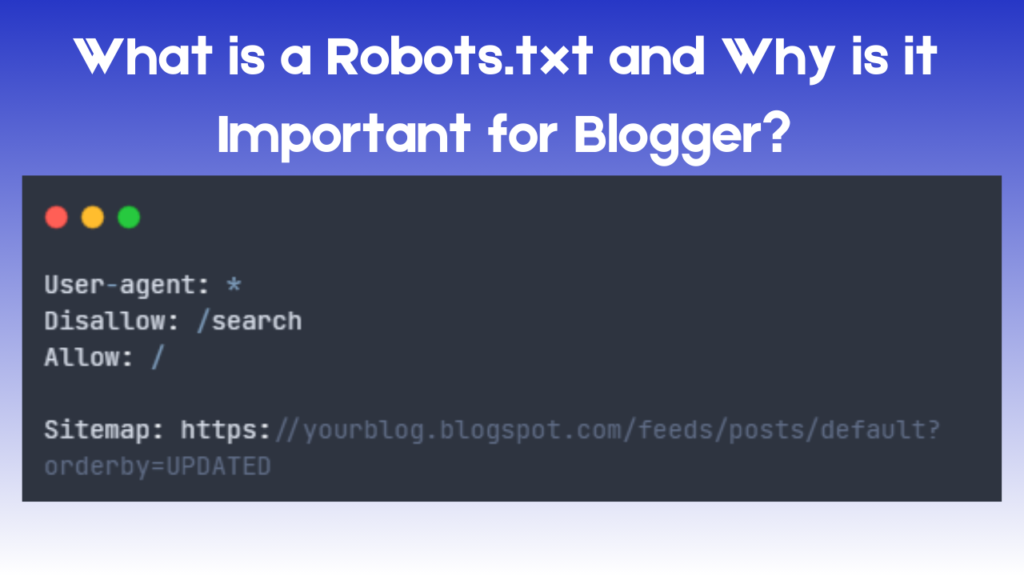

Here’s a basic example of a robots.txt file for Blogger:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://yourblog.blogspot.com/sitemap.xml

Replace “yourblog” with your blog’s actual name. This configuration blocks search engines from crawling the search results pages (/search) while allowing access to all other content. It also includes the sitemap URL, making it easier for search engines to find and index your posts.
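To see what these rules do in practice, you can test them locally before pasting them into Blogger. The sketch below uses Python’s standard `urllib.robotparser` module. The helper name `is_crawlable` and the sample paths are made up for this illustration. Note that this parser applies rules in file order, which may differ from a given search engine’s matching logic for more complex files; for this simple rule set the order does not matter.

```python
from urllib.robotparser import RobotFileParser

# The basic rules, written without blank lines between directives
# (this parser treats a blank line as the end of a rule group).
BASIC_RULES = """\
User-agent: *
Disallow: /search
Allow: /
Sitemap: https://yourblog.blogspot.com/sitemap.xml
"""


def is_crawlable(rules: str, url: str, user_agent: str = "*") -> bool:
    """Return True if `rules` let `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical sample paths; swap in real URLs from your own blog.
for path in ("/search?q=seo", "/search/label/News", "/2024/01/my-post.html"):
    url = "https://yourblog.blogspot.com" + path
    verdict = "allowed" if is_crawlable(BASIC_RULES, url) else "blocked"
    print(path, "->", verdict)
```

Because Disallow matches by path prefix, anything that starts with /search is blocked, including label pages under /search/label/.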

Custom Robots.txt Code for Blogger

The custom robots.txt code for Blogger can vary depending on the size and structure of your blog. Here are a few code examples tailored to different needs:

1. Basic Custom Robots.txt for Small Blogs

If your blog is small (under 100 posts), a basic configuration is typically enough:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://yourblog.blogspot.com/feeds/posts/default?orderby=UPDATED

This code tells search engines to ignore search result pages but to crawl the other areas of your blog. It uses the blog’s posts feed as the sitemap link to help search engines discover your posts.

2. Advanced Custom Robots.txt for Larger Blogs

For larger blogs with multiple categories and archives, you may want to block archive pages as well to prevent duplicate content:

User-agent: *

Disallow: /search

Disallow: /archive

Allow: /

Sitemap: https://yourblog.blogspot.com/feeds/posts/default?orderby=UPDATED

This configuration excludes search results and any URL whose path begins with /archive, helping to avoid duplicate indexing and keeping crawlers focused on new and relevant content.
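A Disallow rule matches by path prefix, so it is worth checking that /archive actually covers the archive URLs your blog uses. The Python sketch below runs the advanced rules against a few sample paths with the standard `urllib.robotparser` module. The helper name `blocked_paths` and the sample paths are made up for this illustration, so substitute links copied from your own blog.

```python
from urllib.robotparser import RobotFileParser

ADVANCED_RULES = """\
User-agent: *
Disallow: /search
Disallow: /archive
Allow: /
"""


def blocked_paths(rules, paths, site="https://yourblog.blogspot.com"):
    """Return the subset of `paths` that the rules block for all crawlers."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return [p for p in paths if not parser.can_fetch("*", site + p)]


# Hypothetical paths; replace with real ones from your blog.
SAMPLE_PATHS = [
    "/archive/2024",
    "/search?updated-max=2024",
    "/2024/01/",
    "/2024/01/my-post.html",
]
print(blocked_paths(ADVANCED_RULES, SAMPLE_PATHS))
```

In this sample, only the first two paths are blocked. A date-style path such as /2024/01/ does not begin with /archive, so if your archive links look like that, the rule will not affect them and you would need a different pattern.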

3. Custom Robots.txt for Better Content Focus

If you have specific content you don’t want to be crawled (such as a specific post or page), you can disallow it by specifying its URL:

User-agent: *

Disallow: /search

Disallow: /p/yourpage.html

Allow: /

Sitemap: https://yourblog.blogspot.com/feeds/posts/default?orderby=UPDATED

Replace /p/yourpage.html with the specific URL path of the page or post you want to exclude from indexing.
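The three variants above differ only in their Disallow lines, so if you maintain several blogs you could generate the text instead of editing it by hand. The following Python sketch shows one way to do that. The function name `build_robots_txt` and its parameters are made up for this illustration and are not part of Blogger.

```python
def build_robots_txt(blog_host, disallow=("/search",), sitemap_path="/sitemap.xml"):
    """Build robots.txt text for all crawlers from a list of paths to block."""
    for path in disallow:
        # Rule paths are matched from the site root, so they must start with "/".
        if not path.startswith("/"):
            raise ValueError(f"path must start with '/': {path!r}")
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines.append("Allow: /")
    lines.append(f"Sitemap: https://{blog_host}{sitemap_path}")
    return "\n".join(lines) + "\n"


# Reproduces the third example; "yourblog" and the page path are placeholders.
print(build_robots_txt(
    "yourblog.blogspot.com",
    disallow=("/search", "/p/yourpage.html"),
    sitemap_path="/feeds/posts/default?orderby=UPDATED",
))
```

The printed text can be pasted directly into the custom robots.txt box in Blogger’s settings.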

Submitting Your Blogger Robots.txt to Google Search Console

Once you’ve configured your custom robots.txt file, it’s essential to ensure Google acknowledges these settings by submitting them to Google Search Console:

- Go to Google Search Console: Log in to Google Search Console using your Google account.

- Add Your Blog (if not already added): If this is your first time, add your Blogger blog by entering its URL.

- Use the URL Inspection Tool: After adding your blog, go to the URL Inspection tool to test your custom robots.txt file.

- Submit Sitemap: If you haven’t already, submit your sitemap URL (listed in your robots.txt) under the Sitemaps section of Google Search Console.

This step confirms that Google is using your custom robots.txt settings and sitemap to understand and crawl your content effectively.

Tips for Optimizing Your Custom Robots.txt for Blogger

Creating a custom robots.txt file is a great start, but to fully optimize it, consider the following tips:

- Keep the File Simple: Avoid excessive Disallow or Allow rules, which can complicate crawling. Stick to only what’s necessary to achieve your SEO goals.

- Exclude Low-Value Pages: Pages like search results, category archives, and duplicate URLs dilute SEO and should be excluded from indexing.

- Update Robots.txt as Needed: If you add new sections to your blog or restructure content, revisit your robots.txt file to make necessary adjustments.

- Monitor Crawl Errors in Google Search Console: Regularly check Google Search Console for crawl errors or warnings related to your robots.txt settings.

Common Mistakes to Avoid with Robots.txt in Blogger

Using robots.txt incorrectly can harm your SEO instead of helping it. Here are a few common mistakes to avoid:

- Blocking Essential Pages: Ensure you’re not inadvertently blocking pages you want to rank in search engines. Double-check the URLs and paths in your Disallow directives.

- Ignoring Crawl Budget: Larger blogs with frequent content updates should manage their crawl budget by excluding unimportant pages to ensure search engines focus on high-value content.

- Forgetting to Update Sitemap Links: Always keep the sitemap URL in your robots.txt file up-to-date, especially if you switch from a default to a custom sitemap.
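Two of these mistakes, blocking essential pages and leaving a stale sitemap link, can be caught with a quick automated check. The Python sketch below is one possible approach using the standard `urllib.robotparser` module (its `site_maps()` method needs Python 3.8 or later). The function name `audit_robots`, the sample rules, and the sample paths are all made up for this illustration.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def audit_robots(rules, site, must_crawl):
    """Return a list of problems found in robots.txt text for the given site."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    problems = []
    # Mistake 1: a page you want ranked is blocked by a Disallow rule.
    for path in must_crawl:
        if not parser.can_fetch("*", site + path):
            problems.append(f"blocked: {path}")
    # Mistake 2: a Sitemap line points at a different host than the blog.
    site_host = urlparse(site).netloc
    for sitemap in parser.site_maps() or []:
        if urlparse(sitemap).netloc != site_host:
            problems.append(f"sitemap on another host: {sitemap}")
    return problems


# Deliberately flawed example: "/p" blocks every static page,
# and the sitemap still points at a hypothetical old address.
FLAWED_RULES = """\
User-agent: *
Disallow: /search
Disallow: /p
Allow: /
Sitemap: https://oldblog.blogspot.com/sitemap.xml
"""

for problem in audit_robots(
    FLAWED_RULES,
    "https://yourblog.blogspot.com",
    ["/2024/01/my-post.html", "/p/about.html"],
):
    print(problem)
```

For the flawed rules above, the check reports that /p/about.html is blocked and that the sitemap lives on another host. It only covers these two mistakes, so treat it as a starting point rather than a full validator.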

Conclusion

Customizing the robots.txt file for your Blogger blog can have a positive effect on your SEO by telling search engines clearly what to crawl. By following the good practices in this guide, you can improve your blog’s visibility, manage duplicate content, and streamline crawling to improve your blog’s overall performance in search results.

Revisit your settings in Google Search Console regularly to check indexing and crawl efficiency, so that you get the most out of your custom robots.txt configuration.